I usually rely on Infrastructure as Code to build my solutions - usually, not always. In my AWS Playground account that I use for demos and exploration, there are some resources that I’ve created manually over the years and forgot to delete.

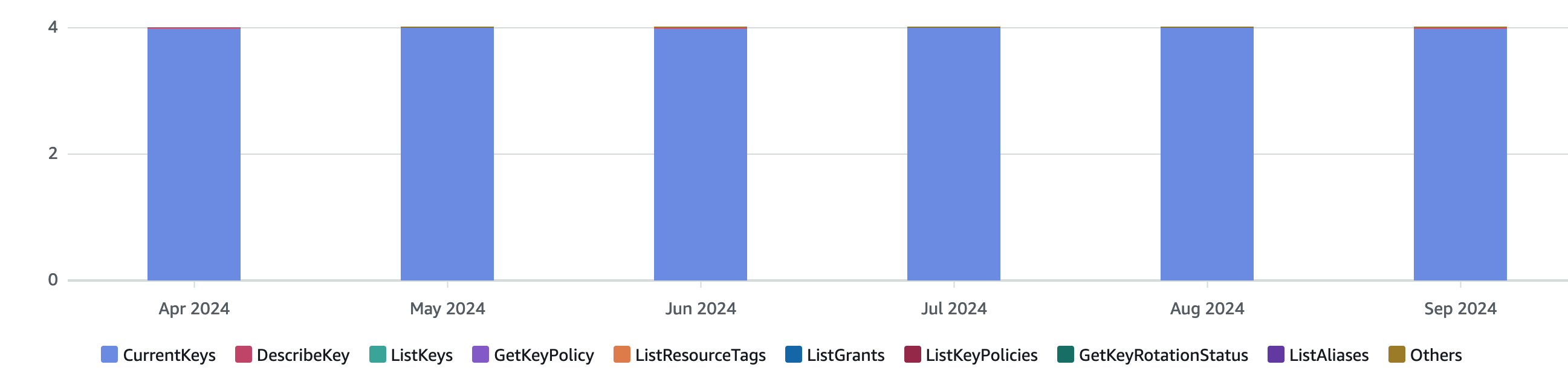

I check Cost Explorer at least once per month to identify resources that could be turned off, and this time, I decided to take a closer look at my KMS charges. They’re nowhere near exorbitant at slightly more than $4 per month, but given that barely anything is running in this account, $4 ends up being a significant fraction of the bill.

At this point, I should probably point out that there are opportunity costs involved when I spend time optimizing a monthly $4 charge. If it were only about the money, this wouldn’t break even for quite some time. Since I use this as an opportunity to educate myself (and potentially you) about approaches to tackle these kinds of issues, which expands my skills for real projects, I’m willing to spend a bit more time on it.

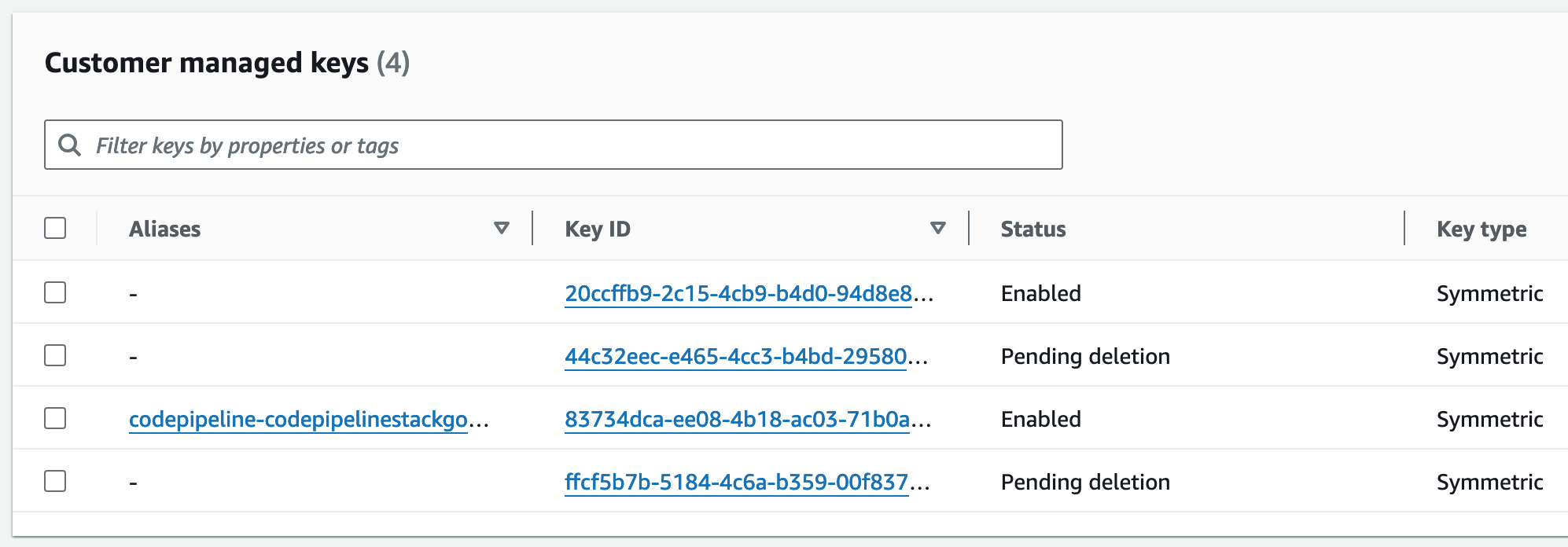

The lion’s share of the roughly $4 per month is the $1 per month that AWS charges per customer managed key, and since I have precisely four of those, this accounts for the costs aside from a couple of cents for API calls. Now the question is: what are these keys being used for? Taking a look at the console reveals only key IDs and aliases, although individual keys can also have a description. (This screenshot is post-cleanup; I forgot to take one in advance.)

While there’s a description field, whoever created these (me) never bothered to add any text. Fortunately, two had aliases defined, which allowed me to identify the stack they belonged to, and I was able to schedule one of them for deletion (as well as clean up a few other resources). The other key with an alias is still being used, and this leaves us with two unaliased keys. Sometimes, the resource policy on the key or any tags they might have attached can help us figure out what their purpose is, but in my case, none of them were insightful.
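The same inventory can be pulled without clicking through the console. What follows is a minimal sketch, not what I actually ran: the function names `summarize_customer_keys` and `undocumented` are made up for illustration, and `kms` is assumed to behave like `boto3.client("kms")`.

```python
def summarize_customer_keys(kms):
    """List customer managed KMS keys with the metadata that hints at their purpose.

    `kms` is assumed to behave like boto3.client("kms").
    """
    # Collect alias names per key ID. Reserved aliases that have no key
    # behind them carry no TargetKeyId and are skipped.
    aliases_by_key = {}
    for page in kms.get_paginator("list_aliases").paginate():
        for alias in page["Aliases"]:
            if "TargetKeyId" in alias:
                aliases_by_key.setdefault(alias["TargetKeyId"], []).append(alias["AliasName"])

    summary = []
    for page in kms.get_paginator("list_keys").paginate():
        for key in page["Keys"]:
            meta = kms.describe_key(KeyId=key["KeyId"])["KeyMetadata"]
            if meta["KeyManager"] != "CUSTOMER":
                continue  # skip AWS managed keys
            summary.append({
                "key_id": meta["KeyId"],
                "state": meta["KeyState"],
                "description": meta.get("Description", ""),
                "aliases": sorted(aliases_by_key.get(meta["KeyId"], [])),
            })
    return summary


def undocumented(summary):
    """Return the IDs of keys that have neither an alias nor a description."""
    return [k["key_id"] for k in summary if not k["aliases"] and not k["description"]]
```

With real credentials, `undocumented(summarize_customer_keys(boto3.client("kms")))` should return exactly the kind of mystery keys this post is about.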

This leaves me with two questions to answer before I can consider deleting the keys:

- Are these keys actively used?

- Are there resources where the keys are part of the configuration?

To answer my questions, I considered a few data sources and tools:

- AWS Config as the central repository to track changes to my resources

- AWS CloudTrail, which provides an audit trail of all, well most, API calls

- IAM to identify references to these specific keys in policies

- Steampipe, which can be used to query resources using SQL

Additionally, I considered the time-honored scream test, where you just shut things off and wait for someone to scream. However, this being my own playground environment with less-than-ideal monitoring led me to discard this option. If you’re the only potential screamer, it’s less fun.

AWS Config

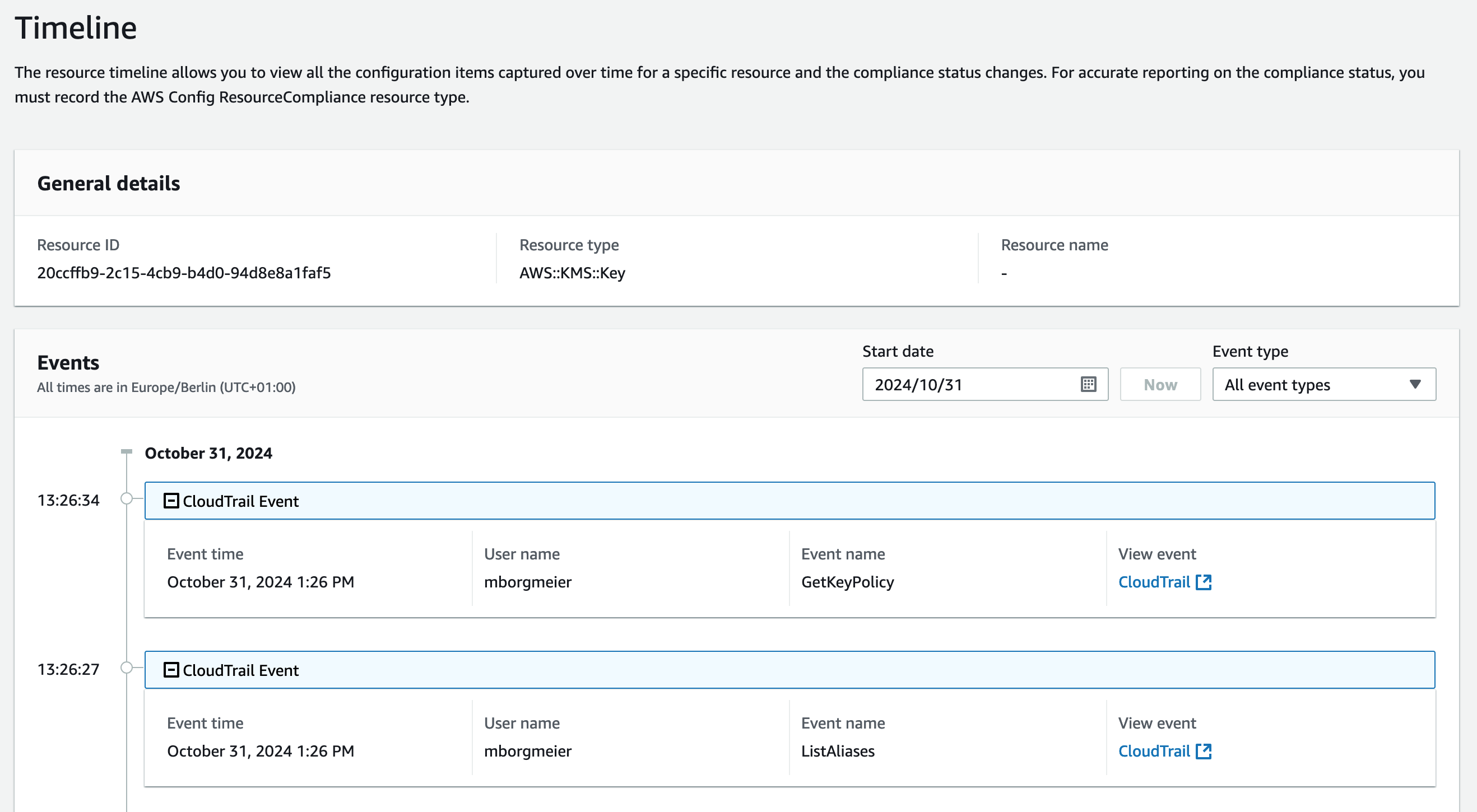

Searching for the key by its ID in AWS Config led me to the resource timeline for the key, which in turn has an integration with CloudTrail.

I could see that there had been no config changes to the key in a while and that there’s some kind of API activity that CloudTrail reports on. Unfortunately, there was so much noise where the IAM Access Analyzer (not useful for this question) and AWS Config itself were accessing the key that this wasn’t very useful. A view of the connected resources, i.e., those that reference the current resource, would have been handy here, but there is none.

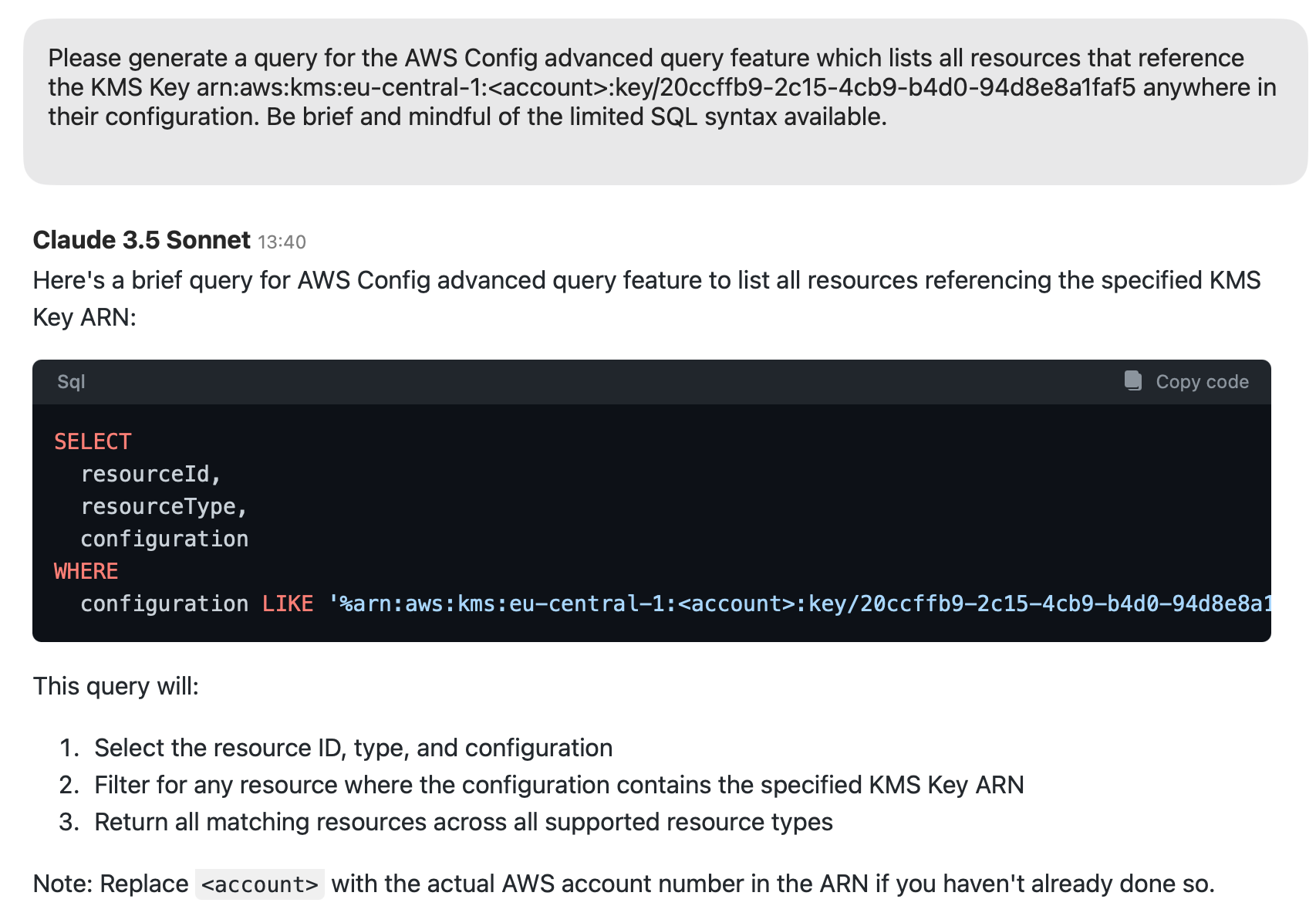

Fortunately, there is the advanced query feature that allows you to select resources using SQL. For the data schema, the console just features a link to GitHub where you can learn a bit about the attributes and data types. Some visual explorer would have been nice here. Since I wasn’t too excited about looking through literally hundreds of JSON files to get the schema, I asked Claude 3.5 to help.

Naturally, this failed because, for some obscure reason, the LIKE syntax doesn’t support a wildcard character at the beginning of the pattern:

Wildcard matches beginning with a wildcard character or having character sequences of less than length 3 are unsupported

When I prodded Claude more about that, it spit out SQL statements with many OR conditions for all possible parameter names and filters for resources I didn’t ask for. I was a bit annoyed; I wanted it to treat all configuration options as strings and just find an ARN in there (Amazon Q wasn’t helpful here, either). Looking at the documentation for advanced queries leads me to believe that my use case is clearly too advanced and, thus, not supported. Is searching for connections between resources such an obscure requirement?
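One way around the missing leading wildcard would be to let AWS Config return every configuration item and do the substring search on the client. This is a sketch of that idea, not something I ran for this post. It assumes `config` behaves like `boto3.client("config")` and that selecting the whole `configuration` object is accepted by the advanced query syntax; `find_references` is a made-up name.

```python
import json


def find_references(config, needle,
                    expression="SELECT resourceId, resourceType, configuration"):
    """Return (resourceType, resourceId) for every item whose JSON mentions `needle`.

    `config` is assumed to behave like boto3.client("config"). The default
    expression is an assumption; adjust it if Config rejects it.
    """
    matches = []
    token = None
    while True:
        kwargs = {"Expression": expression, "Limit": 100}
        if token:
            kwargs["NextToken"] = token
        response = config.select_resource_config(**kwargs)
        # Each result is a JSON document serialized as a string, so a plain
        # substring test treats all configuration options as text.
        for raw in response["Results"]:
            if needle in raw:
                item = json.loads(raw)
                matches.append((item.get("resourceType"), item.get("resourceId")))
        token = response.get("NextToken")
        if not token:
            return matches
```

Passing the bare key ID as `needle` instead of the full ARN may catch resources that store only the ID. The key itself will show up in the result, which is a handy sanity check that the search works at all.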

AWS CloudTrail

Disappointed by AWS Config, I decided to check CloudTrail. I was confident that this would at least allow me to figure out if something was actively using the key. Since the in-console event history is limited to 90 days of management events, I created an Athena table that allowed me to query the raw data in S3 since the beginning of time, which for this account is a bit more than five years ago.

SQL with nested data structures is always a bit finicky, so I tried to give Claude another chance to redeem itself and was (predictably) disappointed. Some good old-fashioned googling about properly unnesting arrays yielded the following query, which lists the API calls where the KMS key in question is the target, which user agent performed them, how many times, and when the last call happened.

```sql
-- Aggregate every CloudTrail event that lists the key as a target resource
SELECT eventsource
     , eventname
     , count(*) AS n_requests
     , max(eventtime) AS latest_request
     , useragent
FROM my_athena_table,
     UNNEST (resources) AS t(resource)  -- one row per resource referenced by an event
WHERE 1=1
  AND resource.arn = 'arn:aws:kms:eu-central-1:<account>:key/<key-id>'
GROUP BY eventsource, eventname, useragent
ORDER BY eventsource, eventname, useragent
```

The query took some time to run because Athena isn’t optimized to work with thousands of tiny compressed JSON files, but after a bit more than two minutes, I got my result. That’s how I found out that earlier this year, I had been using the key for some Textract encryption experiments, and since then, there had only been Describe* operations. This means it was most likely fine to delete the key. Unfortunately, CloudTrail couldn’t really help me figure out where the key is currently configured, as not all parameters and responses are fully captured (size constraints).
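The distinction between "something encrypted or decrypted with this key" and "something merely looked at it" can be made explicit once the query result is exported. The sketch below assumes each row is a dict with the column names from the query above and that `latest_request` is an ISO 8601 UTC string, so string comparison orders the timestamps correctly. The set of event names is my own selection of KMS operations and not an exhaustive list.

```python
# KMS event names that indicate the key did actual cryptographic work
# (a hand-picked selection, not an exhaustive list).
CRYPTO_EVENTS = {
    "Encrypt", "Decrypt", "ReEncrypt",
    "GenerateDataKey", "GenerateDataKeyWithoutPlaintext",
    "GenerateDataKeyPair", "GenerateDataKeyPairWithoutPlaintext",
    "Sign", "Verify", "GenerateMac", "VerifyMac",
}


def last_cryptographic_use(rows):
    """Return the latest timestamp of a cryptographic call, or None if there is none.

    Describe*, List* and Get* calls are ignored because scanners such as
    AWS Config generate them without anything depending on the key.
    """
    timestamps = [
        row["latest_request"]
        for row in rows
        if row["eventsource"] == "kms.amazonaws.com" and row["eventname"] in CRYPTO_EVENTS
    ]
    return max(timestamps, default=None)
```

If the function returns `None` or a date far in the past, the key is probably not in active use, although it can still be referenced in some resource’s configuration.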

AWS IAM / Steampipe

Last, but not least, I tried using Steampipe to query AWS resources using SQL. Steampipe makes AWS resources available as tables, and I could use the following statements to find out which customer-managed policies and inline policies reference the key.

```sql
-- Find customer-managed policies that reference the key
select *
from aws_iam_policy as p
where p.policy::text like '%arn:aws:kms:eu-central-1:<account>:key/<key-id>%';

-- Find users with inline policies that reference the key
select *
from aws_iam_user
where inline_policies::text like '%arn:aws:kms:eu-central-1:<account>:key/<key-id>%';

-- Find roles with inline policies that reference the key
select *
from aws_iam_role
where inline_policies::text like '%arn:aws:kms:eu-central-1:<account>:key/<key-id>%';
```

This is how I found out that I may have created the key using Terraform, because a few roles showed up that had clearly been created through Terraform, but none of those things are still in use.

Unfortunately, you can’t really use Steampipe to figure out all the places that reference the key in a practical manner, as it relies on API calls to AWS, and you’d be querying every AWS service. The service that already has all of this information in one spot is AWS Config, but its SQL feature is severely limited. It’s possible to export an AWS Config snapshot to S3 and query that using Athena if you want to jump through a couple more hoops, but this is where I decided that I had enough info to delete the KMS key.

In about a week, I’m going to have only two keys: the one that’s still in use and another one for demos, this time with a description and tags.
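The week of waiting comes from KMS itself: keys are never deleted immediately, only scheduled for deletion with a waiting period of 7 to 30 days. A minimal sketch of that step, assuming `kms` behaves like `boto3.client("kms")`; `schedule_deletion` is a made-up helper name.

```python
def schedule_deletion(kms, key_id, pending_days=7):
    """Schedule a KMS key for deletion and return the planned deletion date.

    `kms` is assumed to behave like boto3.client("kms").
    """
    if not 7 <= pending_days <= 30:
        raise ValueError("KMS accepts a waiting period of 7 to 30 days")
    response = kms.schedule_key_deletion(KeyId=key_id, PendingWindowInDays=pending_days)
    return response["DeletionDate"]
```

During the waiting period, the key can’t be used, so this doubles as a slow-motion scream test. If something does scream, `cancel_key_deletion` brings the key back in a disabled state.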

What can we do to avoid getting in this position in the first place? The first step is tagging your resources. Not all resources support it, but most do. You can start by adding an application tag, allowing you to view the resources as a group in the resource explorer. Also, Infrastructure as Code will usually help you. The tools make it easy to apply tags to all resources (you still have to configure it, though), and this gives you a much better insight into what’s going on.

In my case, part of the resources were created through IaC, so clearly, this is not a silver bullet; you still have to do it properly. On a related note - if someone has seen my Terraform state and code for that experiment, please let me know.
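For resources that already exist, the description and tags can be added after the fact. Here is a small sketch for KMS keys, again assuming `kms` behaves like `boto3.client("kms")`. The helper name `document_key` and the tag names in the usage note are only examples.

```python
def document_key(kms, key_id, description, tags):
    """Attach a description and tags to an existing KMS key.

    `kms` is assumed to behave like boto3.client("kms");
    `tags` is a plain dict such as {"application": "demos"}.
    """
    kms.update_key_description(KeyId=key_id, Description=description)
    # KMS expects tags as a list of TagKey/TagValue pairs.
    kms.tag_resource(
        KeyId=key_id,
        Tags=[{"TagKey": name, "TagValue": value} for name, value in sorted(tags.items())],
    )
```

A call like `document_key(kms, key_id, "Key for demos", {"application": "demos", "owner": "maurice"})` would have saved me most of the detective work above.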

Conclusion

In conclusion, we learned about a few tools that we can leverage to identify who or what is using or referencing our resources, which may help with cost optimization or at least allocation. Since this process is a bit annoying after the fact, the old saying “an ounce of prevention is worth a pound of cure” holds true.

— Maurice

I considered calling this blog “How I slashed my KMS bill by 50%” but decided against it because, technically, it’s a bit less than 50%.