Occasionally, you’ll do something that you think you’ve done dozens of times before and are then surprised it no longer works. While setting up a log delivery mechanism for Splunk, I had one of these experiences again. (Feel free to replace relearning with forgetting in the headline.)

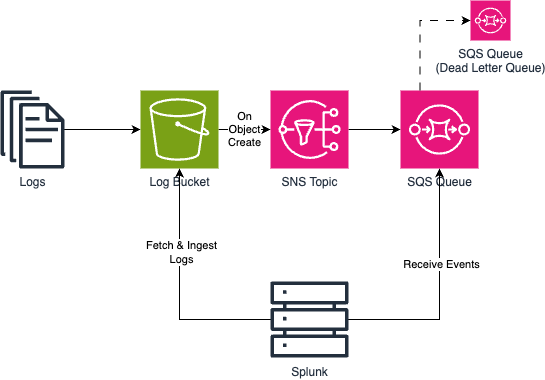

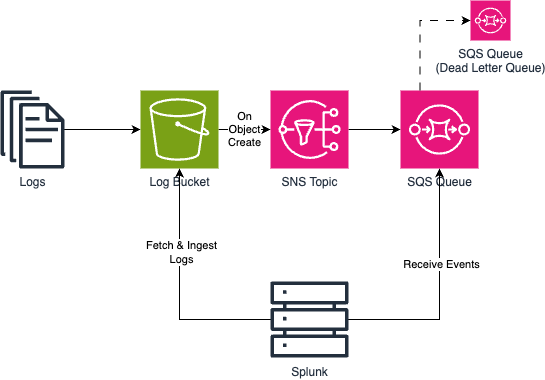

Splunk’s preferred method of ingesting log data from AWS is the SQS-based S3 input. In a nutshell, you ensure that all logs end up in an S3 bucket. That bucket is configured to send all object create events to an SNS topic (so that multiple systems can subscribe), to which an SQS queue is subscribed. Splunk subsequently consumes the object create events from the queue and ingests the corresponding objects from S3.
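To make the message flow concrete, here is a minimal Python sketch of what a consumer of that queue has to unpack. It is not Splunk's implementation, and the function name `s3_objects_from_sqs_body` and the sample bucket and key are made up for illustration. It assumes raw message delivery is disabled on the SNS subscription, so the S3 event arrives wrapped in an SNS envelope.

```python
import json
from urllib.parse import unquote_plus


def s3_objects_from_sqs_body(body: str) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs referenced by one SQS message body."""
    payload = json.loads(body)
    # Without raw message delivery, SNS wraps the S3 event in an envelope
    # whose "Message" field holds the event as a JSON string.
    if payload.get("Type") == "Notification" and "Message" in payload:
        payload = json.loads(payload["Message"])
    objects = []
    # s3:TestEvent messages carry no "Records", so they yield nothing.
    for record in payload.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys are URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects


# Hypothetical sample: an S3 event wrapped in an SNS envelope.
s3_event = {"Records": [{"s3": {"bucket": {"name": "example-log-bucket"},
                                "object": {"key": "logs/app+1.json"}}}]}
sqs_body = json.dumps({"Type": "Notification", "Message": json.dumps(s3_event)})
print(s3_objects_from_sqs_body(sqs_body))
```

If raw message delivery is enabled, the envelope is absent and the same function falls through to reading `Records` directly.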

That’s straightforward - until you add encryption to the mix. Per the customer’s policy, queues and topics need to be KMS-encrypted. Having configured and deployed it that way in Terraform, I was surprised that nothing was showing up in Splunk. I knew that AWS does a bunch of validation when you create an event subscription in S3 or an SQS subscription in SNS. One thing AWS doesn’t validate is whether S3 has permission to publish to an encrypted topic or queue - i.e., whether the S3 service principal is allowed to call kms:GenerateDataKey and kms:Decrypt on the key. If that’s missing, the configuration looks fine, but nothing is ever delivered.

Eventually, I remembered that I had a similar issue years ago, and all I needed to do was allow the S3 service principal to call the aforementioned KMS APIs. At least I’m not the only one who seems to be running into this, as this topic is part of the troubleshooting steps on re:Post. After adding the service principal, everything ran smoothly and logs showed up in Splunk.
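For future me, here is roughly what that fix looks like, sketched in Python rather than the Terraform I actually used. The actions follow the AWS documentation for S3 event notifications to encrypted destinations (kms:GenerateDataKey and kms:Decrypt). The helper names, the `Sid`, and the account ID are placeholders I made up, and the check is deliberately rough: it ignores conditions and wildcard principals, and it expects both actions in a single statement.

```python
import json

# Actions the AWS docs list for S3 publishing to a KMS-encrypted topic or queue.
REQUIRED_ACTIONS = ["kms:GenerateDataKey", "kms:Decrypt"]


def service_key_statement(service: str = "s3.amazonaws.com") -> dict:
    """Key policy statement letting a service principal use the key."""
    return {
        "Sid": "AllowServiceToUseKey",  # hypothetical name
        "Effect": "Allow",
        "Principal": {"Service": service},
        "Action": list(REQUIRED_ACTIONS),
        "Resource": "*",
    }


def _as_list(value):
    # IAM policy JSON allows a single string where a list is expected.
    return value if isinstance(value, list) else [value]


def service_can_use_key(policy: dict, service: str) -> bool:
    """Rough check: does one Allow statement grant all required actions?"""
    for stmt in _as_list(policy.get("Statement", [])):
        principal = stmt.get("Principal", {})
        if stmt.get("Effect") != "Allow" or not isinstance(principal, dict):
            continue
        services = _as_list(principal.get("Service", []))
        actions = _as_list(stmt.get("Action", []))
        granted = all(a in actions or "kms:*" in actions for a in REQUIRED_ACTIONS)
        if service in services and granted:
            return True
    return False


# Placeholder key policy containing only the usual account-root statement.
policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Sid": "EnableRootAccess",
        "Effect": "Allow",
        "Principal": {"AWS": "arn:aws:iam::111122223333:root"},
        "Action": "kms:*",
        "Resource": "*",
    }],
}
print(service_can_use_key(policy, "s3.amazonaws.com"))  # the silent failure case
policy["Statement"].append(service_key_statement())
print(service_can_use_key(policy, "s3.amazonaws.com"))
print(json.dumps(policy, indent=2))
```

The resulting JSON is what ends up in the key policy. Since SNS delivers to an encrypted queue in this setup, the same kind of statement for `sns.amazonaws.com` is probably needed on the queue's key as well; `service_key_statement("sns.amazonaws.com")` would produce it.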

This post is just me trying to make sure I don’t have to relearn this again. Once I write things down, they tend to stick.